Honors Thesis 2024 - Zinc Zhao

Impact of Data Analysis in Nascent NLP Tasks

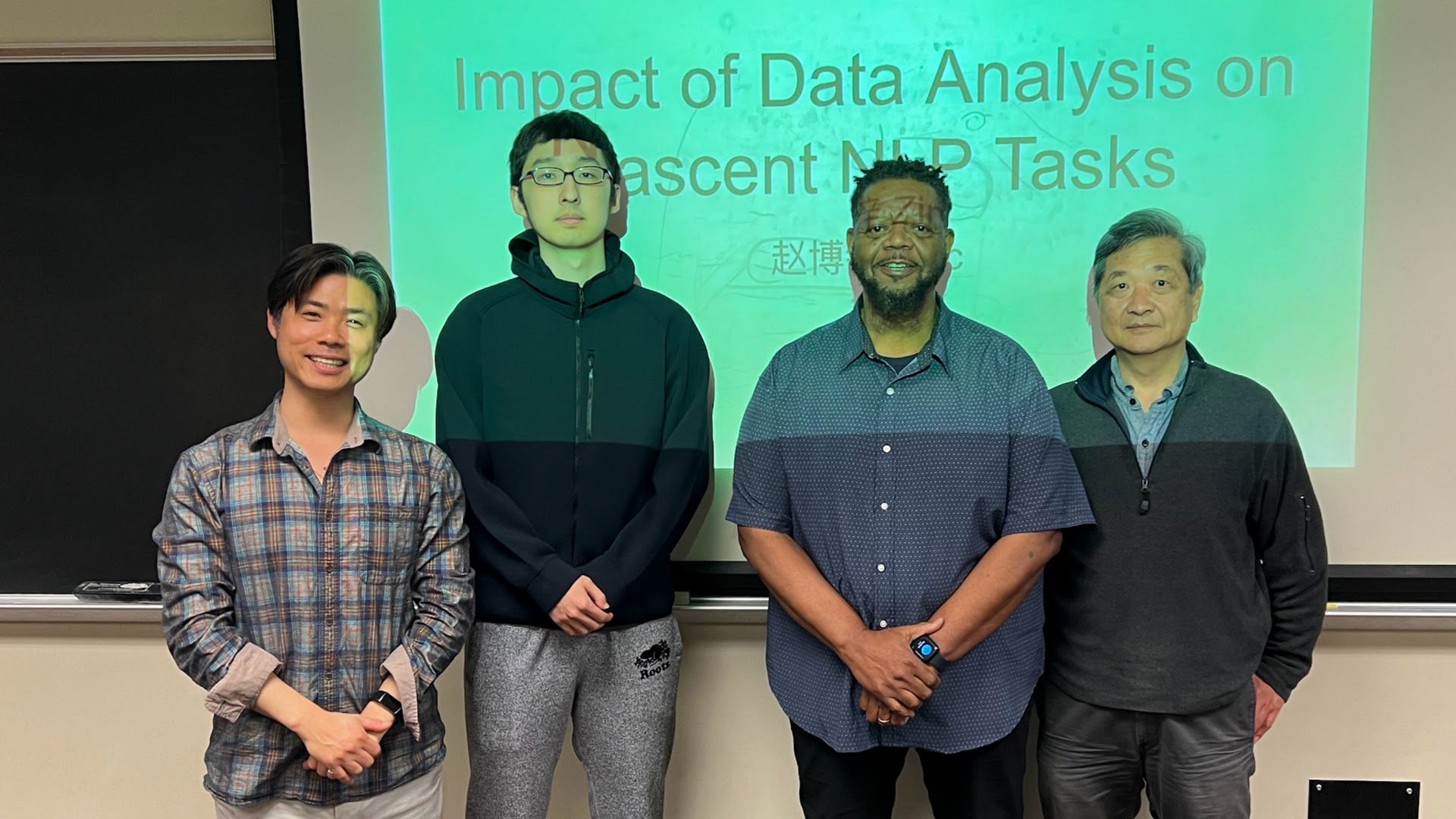

Zinc Zhao

Highest Honors in Computer Science

Abstract

Understanding the importance of data analysis is essential for Natural Language Processing (NLP) research. While it is widely recognized that better data quality leads to better model performance, little to no effort has been made to quantify the impact of data analysis in NLP research. For nascent NLP tasks, this judgment is even harder to make. This thesis studies how three types of deficient datasets affect model performance (a noisy dataset, a falsely targeted dataset, and existing but unsuitable datasets) and the impact that data analysis can have on each. By fixing the noisy labels in a noisy dataset, we improved model performance from 69% to 75% with the model structure unchanged; by re-pointing the falsely targeted dataset to its application scenario, we produced a deployable version of the model; and by creating a new dataset spanning over 1,000 application scenarios, we trained a model that outperforms models trained on other datasets as well as zero-shot GPT. Our work shows that data analysis can have a significant impact on nascent NLP tasks for all kinds of NLP data.

Department / School

Computer Science / Emory University

Degree / Year

BS / Spring 2024

Committee

Jinho D. Choi, Computer Science and QTM, Emory University (Chair)

Shun Yan Cheung, Computer Science, Emory University

Gary Motley, Music, Emory University

Links

Anthology | Paper | Presentation

Jinho Choi, Zinc Zhao, Gary Motley, Shun Yan Cheung