Honors Thesis 2019 - Xinyi Jiang

Incremental Sense Weight Training for Contextualized Word Embedding Interpretation

Xinyi Jiang

Highest Honor in Computer Science

Abstract

In this work, we propose a new training procedure for learning the importance of individual word embedding dimensions in representing word meanings. Our algorithm advances the field of word embedding interpretation, which is critical to NLP because word embeddings remain poorly understood despite their strong performance across NLP tasks. Previous work has investigated the interpretability of word embeddings either by imparting interpretability to the embedding training models or by post-processing pre-trained embeddings. Our algorithm offers a new perspective on dimension-level interpretation, in which each dimension is evaluated and can be visualized. It also adheres to a novel assumption that not all dimensions are necessary for representing a word sense (word meaning), so negligible dimensions are discarded, which has not been attempted in previous studies.

Department / School

Computer Science / Emory University

Degree / Year

BS / Spring 2019

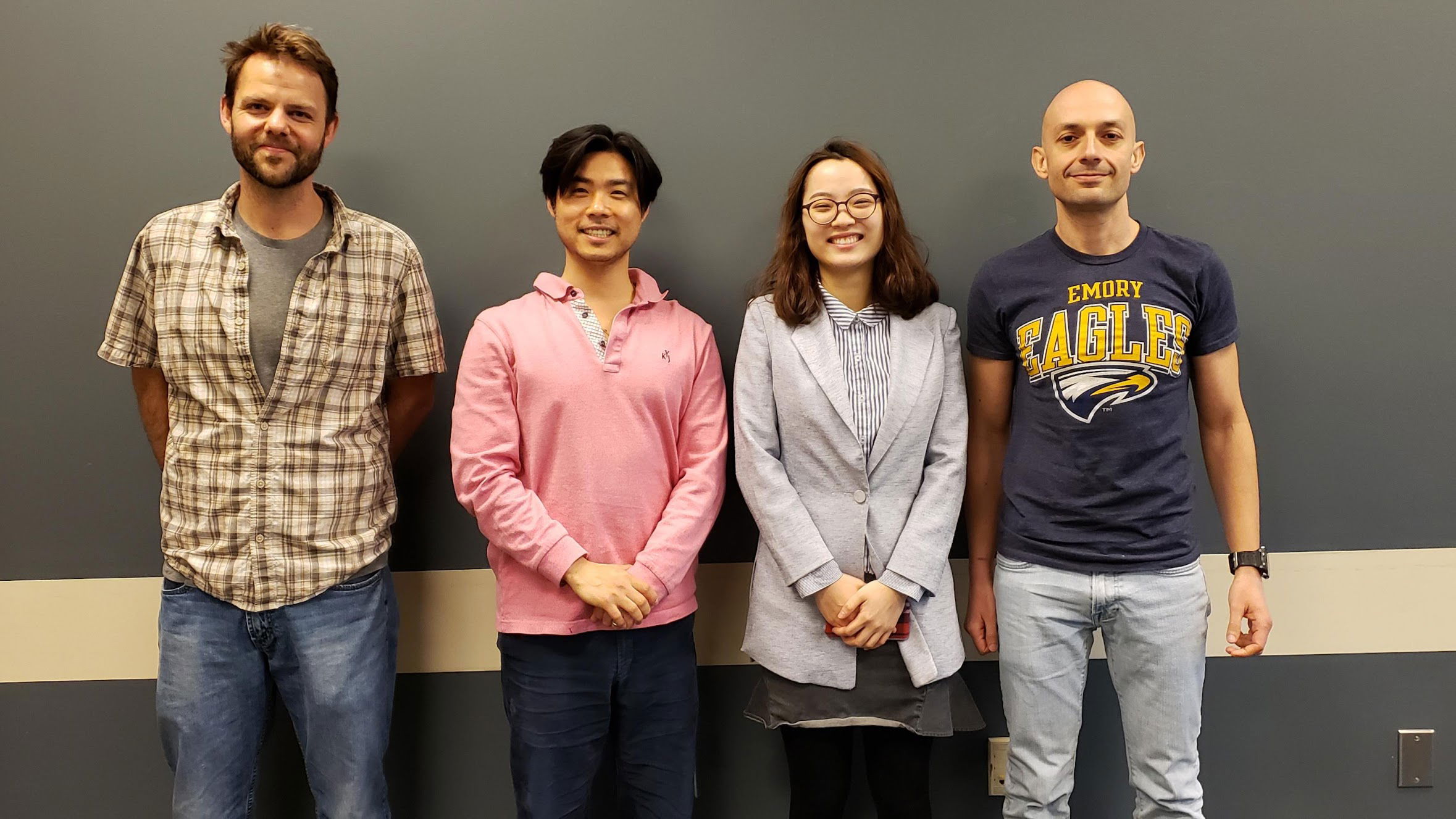

Committee

Jinho D. Choi, Computer Science and QTM, Emory University (Chair)

Davide Fossati, Computer Science, Emory University

Justin Burton, Physics, Emory University

Links

Anthology | Paper | Presentation